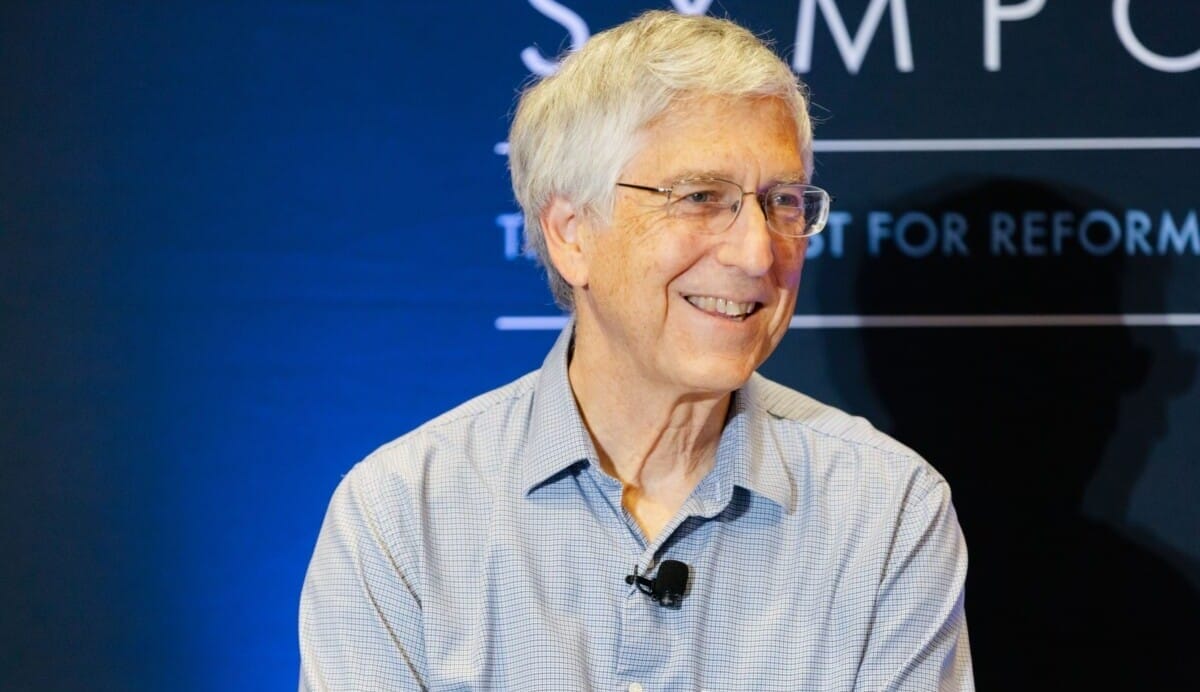

Mark Horowitz, a leading computer scientist and electrical engineer at Stanford University, has declared that Moore’s Law is “basically over”, which will have significant ramifications for artificial intelligence investors who are counting on more computing power to feed into more complex models.

It is a view shared by Nvidia CEO Jensen Huang, who pronounced the law “dead” in 2022 while justifying a hike in chip prices.

The law refers to the observation that the number of transistors on an integrated circuit doubles roughly every two years while the cost per transistor falls. That’s an economic factor that has driven the semiconductor industry, said Horowitz, who is chair of the department of electrical engineering and professor of computer science at Stanford University.

“If I have a product and I’m selling it in high volume, when I move to the next generation technology, it’ll become cheaper to produce, therefore I will make more money, or it will prevent my competition from underselling me. We can then create either the same parts we have now cheaper, or we can build even better parts at the same price point,” he told the Top1000funds.com Fiduciary Investors Symposium.

“We still are scaling technology. We’re still building more advanced processing nodes. We’re cramming more transistors per square millimetre, but unfortunately, the transistors are not getting cheaper.”

Machine learning scaling laws hold that bigger models tend to perform better, which is why the end of Moore’s Law and sluggish growth in computing power could be a roadblock for AI development, Horowitz said.

“This is a major disruption, because our expectation is that we can do more computing and we’re pushing more stuff to the cloud,” he said.

“Now Moore’s Law is actually flat, so all that [AI model] complexity is going to have to be done through sort of algorithms or applications… if we think about the economics, where is money going to be made?”

Horowitz believes most companies are losing money on their AI projects. “All the hyperscalers in the world [like Google, Meta, Amazon and Alibaba] are spending [an] enormous amount of money trying to protect some of the business they have because they’re worried about losing it,” he said.

He suggested the profitability of an AI application hinges on two things: the service cost and the liability cost. The latter will become increasingly pronounced as the world moves towards agentic AI applications, because service providers will need to start taking responsibility for the decisions and suggestions of their AI agents.

Taking these costs into account, Horowitz said it is highly likely that profitable AI models or applications in the future will not be large, but small.

“Both of those, to me, indicate that what you’re going to try to do is reduce the scope of the model to something in a particular area, so you can make it cheaper to serve and less likely to make a bad thing,” he explained.

“If that’s true, then the people who are going to start making money are not the people who are using the really big models. It’s the people who have used the big models to create smaller models or more domain-specific models.”

These views are not pessimistic predictions for the AI space, Horowitz said, adding that he is optimistic about models which can find useful and specific applications.

“I do think there’s going to be some big craters, because there’s been billions of dollars invested in a lot of companies, and I’m not sure they’re going to be the people who survive,” he said.

“I have no doubt that machine learning is going to change the world. The world’s going to be very different. Who’s going to be the player there, I think, is still to be decided.”